Microservices Architecture: A Beginner’s Guide to Scalable Systems

In today’s fast-paced development landscape, where scalability, agility, and maintainability are key, monolithic applications often fall short. Enter Microservice Architecture, an architectural style that’s transforming how modern applications are built and deployed. In this post, we’ll explore the core concepts of microservices, look at their benefits, review what to consider when implementing them, and see how individual services communicate. We’ll wrap up with a practical example of two services interacting.

Understanding the Concept: What is Microservice Architecture?

- Microservice Architecture (MSA) is a software design approach where a large application is broken down into smaller, independent services. Each of these services focuses on a specific business functionality and can be developed, deployed, and scaled independently.

- Instead of a tightly coupled structure where everything is bundled together (as in monolithic applications), microservices promote modularity, autonomy, and decentralization.

- Characteristics of Microservices:

- Single responsibility: Each service handles one core business function.

- Independently deployable: A service can be changed and redeployed without redeploying the others.

- Technology agnostic: Each service can be built using different languages or databases.

- Resilience: With proper isolation, a failure in one service doesn’t bring down the entire system.

- Scalable: You can scale only the services that need more resources.

Why Move to Microservices? The Pitfalls of Monolithic Architectures

- Monolithic applications are easier to build at first, but as they grow, they become difficult to maintain. Let’s break down why microservices are often the better choice:

- Limitations of Monolithic Architecture:

- Difficult to scale: Scaling the entire app for one feature increases cost and complexity.

- Tightly coupled components: A small change can require retesting the whole app.

- Deployment bottlenecks: Even a small change requires redeploying the whole application, which slows release cycles.

- Lack of flexibility: Every component is stuck with the same tech stack.

- Benefits of Microservices:

- Faster time to market: Microservices enable faster development cycles by allowing teams to work on independent services concurrently.

- Independent deployments: Each microservice can be deployed independently, reducing downtime and allowing more frequent releases.

- Fault isolation: Microservices isolate failures to specific services, minimizing the impact on the entire system.

- Support for DevOps and CI/CD: Microservices align well with DevOps practices, making automation, continuous integration, and continuous delivery easier.

- Better organization for large teams: Microservices allow large teams to work autonomously on different services, improving collaboration and productivity.

Common Pitfalls in Microservice Development

- Defining Clear Service Boundaries

- Splitting services correctly is hard. Poor boundaries can lead to tightly coupled systems or unnecessary fragmentation.

- ✅ Use Domain-Driven Design (DDD) and Bounded Contexts to define logical separations.

- Managing Inter-Service Communication

- Microservices must communicate reliably, whether synchronously or asynchronously.

- Issues like latency, failures, and message duplication are common.

- ✅ Use HTTP/gRPC for sync calls, message queues for async (RabbitMQ, Kafka), and apply patterns like retries, circuit breakers, and timeouts.
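
To make this advice concrete, here is a minimal Python sketch of two of those patterns: retries with exponential backoff and jitter, and a simple consecutive-failure circuit breaker. The names (`CircuitBreaker`, `CircuitOpenError`, `call_with_retries`) are hypothetical, the sketch assumes single-threaded use, and production code would usually rely on a maintained resilience library rather than hand-rolling this.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the service while the circuit is open."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures. Once `reset_timeout`
    seconds have passed, the next call is let through as a trial (half-open):
    success closes the circuit, failure re-opens it."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            raise CircuitOpenError("circuit open: failing fast")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result


def call_with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `fn` with exponential backoff and jitter; re-raise the last error."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # the breaker says the service is down: don't keep hammering it
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt) * random.uniform(0.5, 1.5))
    raise AssertionError("unreachable")
```

In use, you would wrap a remote call as `call_with_retries(lambda: breaker.call(fetch_stock))`, where `fetch_stock` (hypothetical) performs the HTTP request with its own timeout, for example via the `timeout` argument of `urllib.request.urlopen`. Only retry operations that are safe to repeat: retrying a non-idempotent call such as a stock deduction can apply it twice unless the receiving service deduplicates requests.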

- Handling Distributed Data Consistency

- Each service typically manages its own database, complicating transactions.

- Ensuring consistency across services becomes a challenge.

- ✅ Use eventual consistency strategies, sagas, and outbox patterns.
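
The outbox pattern can be sketched in a few lines. The idea: a service writes its own data and the event describing that change in the same local database transaction, and a separate relay later publishes pending events to the broker. This sketch uses SQLite (Python's built-in `sqlite3`) as a stand-in for the service's database; the table names, columns, and the `publish` callback (standing in for a Kafka or RabbitMQ client) are assumptions for illustration.

```python
import json
import sqlite3
import uuid
from typing import Callable

# Hypothetical schema: the service's own table plus an outbox table in the same database.
SCHEMA = """
CREATE TABLE IF NOT EXISTS orders (
    id TEXT PRIMARY KEY, product_id TEXT NOT NULL, quantity INTEGER NOT NULL);
CREATE TABLE IF NOT EXISTS outbox (
    id TEXT PRIMARY KEY, event_type TEXT NOT NULL, payload TEXT NOT NULL,
    published INTEGER NOT NULL DEFAULT 0);
"""


def place_order(conn: sqlite3.Connection, product_id: str, quantity: int) -> str:
    order_id = str(uuid.uuid4())
    event = {"orderId": order_id, "productId": product_id, "quantity": quantity}
    with conn:  # one local transaction: commits both rows or rolls both back
        conn.execute("INSERT INTO orders (id, product_id, quantity) VALUES (?, ?, ?)",
                     (order_id, product_id, quantity))
        conn.execute("INSERT INTO outbox (id, event_type, payload) VALUES (?, ?, ?)",
                     (str(uuid.uuid4()), "OrderPlaced", json.dumps(event)))
    return order_id


def relay_outbox(conn: sqlite3.Connection,
                 publish: Callable[[str, str, dict], None]) -> int:
    """Publish pending events to the broker, then mark them as sent.

    If `publish` raises, the row stays unpublished and is retried on the next run,
    so consumers may see an event more than once (at-least-once delivery)."""
    rows = conn.execute(
        "SELECT id, event_type, payload FROM outbox WHERE published = 0 ORDER BY rowid"
    ).fetchall()
    for event_id, event_type, payload in rows:
        publish(event_id, event_type, json.loads(payload))
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (event_id,))
    return len(rows)
```

Because the relay can crash after publishing but before marking a row as sent, consumers should be written to tolerate duplicate events, for example by ignoring event ids they have already processed.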

- Implementing Effective Service Discovery

- Services deployed on cloud or containerized platforms often have dynamic IPs.

- Manually configuring endpoints doesn’t scale.

- ✅ Adopt service discovery tools like Consul, Eureka, or built-in Kubernetes DNS.
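
On Kubernetes you usually don't write any discovery code: each Service gets a DNS name (by default of the form `<service>.<namespace>.svc.cluster.local`) that routes to its ready pods. Registries such as Consul or Eureka work differently: instances register themselves, send heartbeats, and clients look up live instances. The toy in-memory registry below illustrates that register, heartbeat, and lookup cycle with round-robin selection; it is a conceptual sketch with hypothetical names, not the API of either tool.

```python
import itertools
import time
from typing import Callable, Dict, Iterator, List


class ServiceRegistry:
    """Toy registry: instances register, send heartbeats, and are treated as
    gone if no heartbeat arrives within `ttl` seconds."""

    def __init__(self, ttl: float = 10.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._instances: Dict[str, Dict[str, float]] = {}  # service -> {address: last_seen}
        self._counters: Dict[str, Iterator[int]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, {})[address] = self.clock()

    heartbeat = register  # a heartbeat simply refreshes last_seen

    def healthy(self, service: str) -> List[str]:
        now = self.clock()
        return sorted(addr for addr, seen in self._instances.get(service, {}).items()
                      if now - seen <= self.ttl)

    def resolve(self, service: str) -> str:
        """Pick a healthy instance round-robin (client-side load balancing)."""
        instances = self.healthy(service)
        if not instances:
            raise LookupError(f"no healthy instances of {service!r}")
        n = next(self._counters.setdefault(service, itertools.count()))
        return instances[n % len(instances)]
```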

- Ensuring Robust Security

- Microservices increase the surface area for attacks.

- You must secure inter-service calls, manage auth, and handle secrets properly.

- ✅ Use mTLS, OAuth2/JWT for secure communication, and tools like HashiCorp Vault for secret management.
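
To show what verifying a JWT between services involves, here is a minimal sketch of HS256 (HMAC-SHA256) signing and verification using only Python's standard library. It is illustrative only: in practice you would use a maintained JWT library, often with asymmetric algorithms such as RS256 so that services only need the issuer's public key, and mTLS would be configured at the transport or service-mesh level rather than in application code. The function names and the demo secret are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def b64url_encode(data: bytes) -> str:
    # JWTs use unpadded base64url encoding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_hs256(claims: Dict[str, Any], secret: bytes) -> str:
    """Issue a token (normally the auth server's job; here only for the demo)."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> Dict[str, Any]:
    """Return the claims if the signature and expiry check out; raise ValueError otherwise."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    header = json.loads(b64url_decode(header_b64))
    # Never let the token pick the algorithm (e.g. "none"): accept only what we expect.
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(signature_b64)):  # constant-time compare
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims
```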

- Centralized Monitoring, Logging & Tracing

- Debugging across multiple services is non-trivial. Logs are scattered and request tracing is difficult.

- ✅ Integrate centralized logging (ELK), metrics (Prometheus, Grafana), and distributed tracing (Jaeger, OpenTelemetry).
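
Distributed tracing works by propagating an identifier with every request so that spans from different services can be stitched together. OpenTelemetry uses the W3C Trace Context `traceparent` header for this, formatted as `version-traceid-parentid-flags`. The sketch below shows the core idea by hand: reuse the incoming trace id, mint a new span id for this service, and start a fresh trace if the header is missing or malformed. The function name is hypothetical, it only accepts version `00`, and in a real service the OpenTelemetry SDK would handle this for you.

```python
import re
import secrets
from typing import Optional

# Version 00: 32-hex trace id, 16-hex parent (span) id, 2-hex flags, all lowercase.
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def outgoing_traceparent(incoming: Optional[str]) -> str:
    """Build the traceparent to send downstream from the one we received."""
    match = TRACEPARENT.match(incoming or "")
    # All-zero trace or span ids are invalid and are treated like a missing header.
    if match and match.group(1) != "0" * 32 and match.group(2) != "0" * 16:
        trace_id, flags = match.group(1), match.group(3)  # continue the caller's trace
    else:
        trace_id, flags = secrets.token_hex(16), "01"  # start a new, sampled trace
    span_id = secrets.token_hex(8)  # id of this service's span
    return f"00-{trace_id}-{span_id}-{flags}"
```

Forward the returned value as the `traceparent` header on outgoing calls and include the trace id in every log line; a centralized log store such as ELK can then show all entries for a single request across services.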

- CI/CD Pipeline Complexity

- Managing deployment of multiple services and ensuring compatibility between them is complex.

- Rollbacks, canary releases, and version control need automation.

- ✅ Set up CI/CD pipelines using tools like Jenkins, GitHub Actions, ArgoCD, and leverage Docker/Kubernetes.

- API Versioning & Compatibility

- Changes in one service’s API can break consumers.

- Maintaining backward compatibility is critical in live systems.

- ✅ Version APIs (e.g., /api/v1/), follow semantic versioning, and deprecate responsibly.
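
A minimal sketch of path-based versioning: a breaking change to a hypothetical order resource ships under `/api/v2/` (a new major version), while `/api/v1/` keeps its original response shape by adapting the v2 result. The handlers, field names, and values are made up for illustration.

```python
from typing import Any, Callable, Dict, Tuple

Handler = Callable[[str], Dict[str, Any]]


def get_order_v2(order_id: str) -> Dict[str, Any]:
    # Hypothetical v2 shape: the total becomes an object with a currency field.
    return {"orderId": order_id, "total": {"amount": 49.98, "currency": "USD"}}


def get_order_v1(order_id: str) -> Dict[str, Any]:
    # v1 keeps its original flat shape by adapting the v2 result,
    # so existing clients keep working while they migrate.
    order = get_order_v2(order_id)
    return {"orderId": order["orderId"], "total": order["total"]["amount"]}


ROUTES: Dict[Tuple[str, str], Handler] = {
    ("v1", "orders"): get_order_v1,
    ("v2", "orders"): get_order_v2,
}


def dispatch(path: str) -> Dict[str, Any]:
    """Route paths like /api/v1/orders/42 to the handler for that version."""
    parts = path.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "api":
        raise LookupError(f"no route for {path}")
    _, version, resource, resource_id = parts
    handler = ROUTES.get((version, resource))
    if handler is None:
        raise LookupError(f"no route for {path}")
    return handler(resource_id)
```

Keeping v1 as a thin adapter over v2 means there is only one real implementation to maintain; once monitoring shows no remaining v1 traffic, the old route can be retired.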

- Cross-Team Collaboration & Governance

- Multiple teams handling different services may lack coordination or shared conventions.

- This causes misalignment, delays, or duplicated efforts.

- ✅ Enforce API contracts, shared documentation (Swagger/OpenAPI), and strong ownership models.

- Managing Cost and Infrastructure Overhead

- More services mean more instances, infrastructure, and resource consumption.

- Tools for orchestration, scaling, and monitoring are essential but add complexity.

- ✅ Use cloud-native services with autoscaling, and monitor resource usage to optimize costs.

How Microservices Communicate: Synchronous vs Asynchronous

In a microservice architecture, no service operates in a silo. To fulfill a complete business function (like placing an order or processing a payment), multiple microservices often need to collaborate. The effectiveness of your system heavily depends on how these services communicate with each other.

There are two primary communication styles:

- Synchronous Communication

- Synchronous communication is request-response based. The calling service sends a request and waits for the response before proceeding.

- Common Protocols:

- HTTP/REST APIs:

- The most widely used approach, built on standard HTTP verbs (GET, POST, PUT, DELETE). It’s simple and human-readable, making it a default choice in many projects.

- gRPC:

- A high-performance, language-agnostic RPC framework built on HTTP/2. It uses Protocol Buffers (protobuf) for data serialization and is ideal for low-latency internal communication between services.

- ✅ Advantages:

- Easy to understand and implement

- Immediate feedback from the service being called

- Suitable for use cases requiring a direct response (e.g., user authentication)

- ❌ Disadvantages:

- Tightly couples services: if one goes down, the services calling it may be blocked

- Introduces latency, especially when chained over multiple calls

- Harder to scale under load, because each caller holds a thread or connection while it waits

- Asynchronous Communication

- In asynchronous communication, services communicate by sending messages or publishing events, without waiting for a direct response.

- Common Mechanisms:

- Message Queues (e.g., RabbitMQ, Amazon SQS):

- Messages are pushed into a queue by one service and consumed by another, enabling decoupled processing.

- Event-Driven Architecture (e.g., Kafka, NATS):

- Services publish events (e.g., “OrderPlaced”) and other services subscribe to handle those events independently.

- ✅ Advantages:

- Services remain loosely coupled: if a consumer goes down, producers keep publishing and messages wait in the queue until it recovers

- Improved scalability and fault tolerance

- Enables eventual consistency across distributed systems

- ❌ Disadvantages:

- Adds complexity in debugging, tracing, and message replay

- No immediate response, so it is not suitable when the caller needs the result right away

- Requires handling of message failures and retries
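
Brokers such as RabbitMQ and Amazon SQS are commonly used with at-least-once delivery, so consumers have to cope with both duplicates and failures. This sketch simulates a queue with a `deque` and shows an idempotent consumer that skips message ids it has already processed, requeues failed messages, and moves them to a dead-letter list after `max_attempts`. All names are hypothetical; a real consumer would store processed ids durably (ideally in the same transaction as its own writes) and rely on the broker's own redelivery and dead-letter features.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Callable, Deque, Dict, List, Set


@dataclass
class Message:
    id: str
    body: Dict[str, Any]
    attempts: int = 0


@dataclass
class IdempotentConsumer:
    handle: Callable[[Dict[str, Any]], None]
    max_attempts: int = 3
    processed_ids: Set[str] = field(default_factory=set)
    dead_letters: List[Message] = field(default_factory=list)

    def drain(self, queue: Deque[Message]) -> None:
        """Consume until the queue is empty."""
        while queue:
            msg = queue.popleft()
            if msg.id in self.processed_ids:
                continue  # duplicate delivery: already applied, so just drop it
            try:
                self.handle(msg.body)
            except Exception:
                msg.attempts += 1
                if msg.attempts >= self.max_attempts:
                    self.dead_letters.append(msg)  # park it instead of retrying forever
                else:
                    queue.append(msg)  # redeliver later
                continue
            self.processed_ids.add(msg.id)
```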

🎯 Best Practice Tip:

- Don’t pick just one; combine both styles based on the use case.

- For example, an order service may synchronously confirm payment, then asynchronously trigger shipping and email services via events.

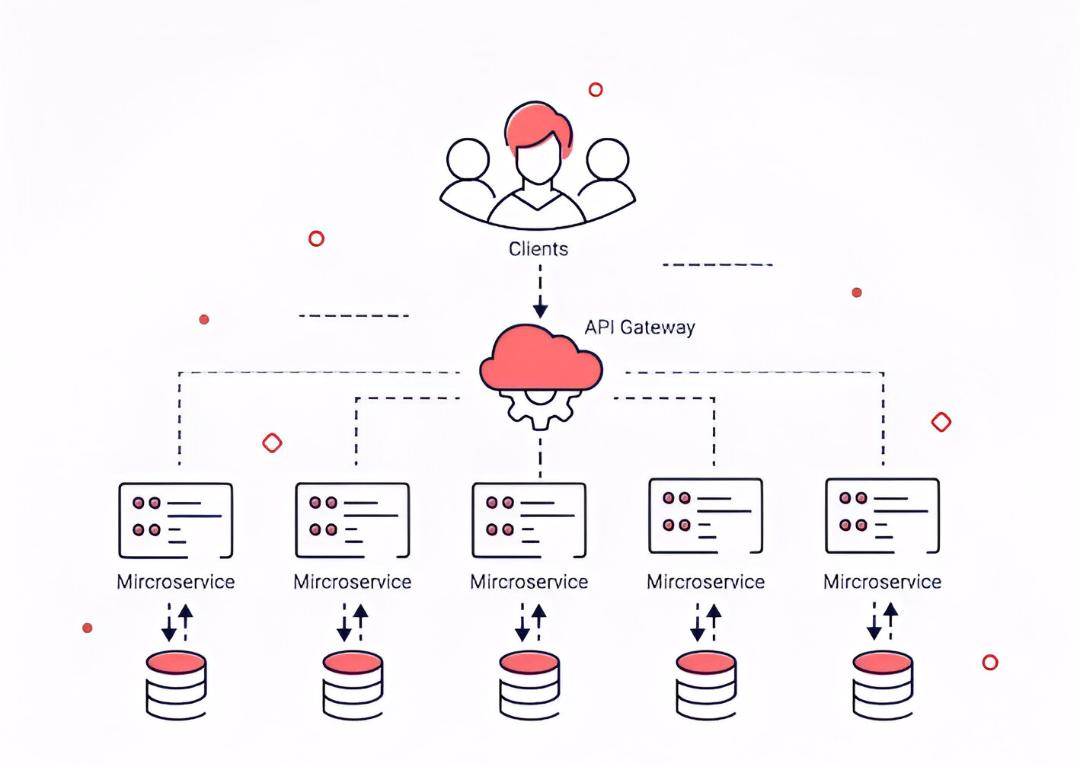

How Clients Interact with Different Microservices: The Role of API Gateways

In a microservice architecture, a single client request often needs several services working together, yet clients usually shouldn’t call each individual service directly. Managing these interactions efficiently becomes critical, especially as the number of microservices grows. This is where an API Gateway comes into play.

- 🔑 The Role of API Gateways

- An API Gateway is a server that acts as an entry point into the microservices ecosystem.

- It provides a unified API for clients to interact with, abstracting the complexities of multiple services behind a single endpoint.

- 🧰 Key Functions of an API Gateway:

- Request Routing: The API Gateway routes client requests to the appropriate backend services based on the request’s type and URL.

- Load Balancing: It can distribute incoming traffic to different instances of services, ensuring high availability and reliability.

- Security: Provides centralized authentication, authorization, and SSL termination.

- Rate Limiting: Protects services from being overwhelmed by too many requests by limiting the number of requests a client can make within a time window.

- API Aggregation: Sometimes, a client needs data from multiple services. Instead of making multiple calls, the API Gateway aggregates responses and returns them as a single response.

- Monitoring and Logging: Centralized tracking of API usage, request logs, and performance metrics.
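
Rate limiting in a gateway is often implemented with a token bucket: each client has a bucket holding up to `capacity` tokens, refilled at `rate` tokens per second, and each request spends one token. This is a minimal single-process sketch with hypothetical class names; a real gateway running several instances would keep the counters in shared storage and reject over-limit requests with HTTP 429 (Too Many Requests).

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens/second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One bucket per client key (e.g. an API key)."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_key: str) -> bool:
        bucket = self.buckets.get(client_key)
        if bucket is None:
            bucket = self.buckets[client_key] = TokenBucket(self.capacity, self.rate, self.clock)
        return bucket.allow()
```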

- 🌐 Client-API Gateway Interaction Flow

- Here’s how the interaction typically works in a microservice-based application:

- Client Sends Request: The client (a browser, mobile app, etc.) sends a request to the API Gateway.

- API Gateway Handles the Request:

- The API Gateway first authenticates the client (e.g., using OAuth2 tokens or API keys).

- It determines which microservice needs to handle the request based on the path, headers, and parameters.

- Request Routing and Aggregation:

- The API Gateway may call one or more services, aggregating their responses if necessary. For example, an order service and payment service might both be needed to complete a transaction.

- Response to Client: Once the API Gateway receives the responses from the required services, it combines them if needed and sends the final response back to the client.
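
The flow above can be sketched as a single handler function. The backend calls are hypothetical stubs returning fixed data, and the `X-API-Key` header and key set stand in for real OAuth2 or API-key validation; products such as Kong or AWS API Gateway provide these steps through configuration rather than hand-written code.

```python
from typing import Any, Dict, Tuple

Response = Tuple[int, Dict[str, Any]]


# Hypothetical backend calls; in a real gateway these would be HTTP or gRPC requests.
def order_service(order_id: str) -> Dict[str, Any]:
    return {"orderId": order_id, "productId": "555", "quantity": 2}


def payment_service(order_id: str) -> Dict[str, Any]:
    return {"orderId": order_id, "status": "PAID"}


VALID_API_KEYS = {"demo-key"}  # stand-in for OAuth2 token or API-key validation


def handle_request(path: str, headers: Dict[str, str]) -> Response:
    # 1. Authenticate the client before touching any backend.
    if headers.get("X-API-Key") not in VALID_API_KEYS:
        return 401, {"error": "unauthorized"}
    # 2. Route on the path.
    parts = path.strip("/").split("/")
    if len(parts) == 2 and parts[0] == "orders":
        return 200, order_service(parts[1])
    if len(parts) == 3 and parts[0] == "orders" and parts[2] == "summary":
        # 3. Aggregate: call two services and merge their answers into one response.
        order_id = parts[1]
        return 200, {"order": order_service(order_id), "payment": payment_service(order_id)}
    return 404, {"error": "not found"}
```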

A Simple Example of Two Microservices

Let’s consider an e-commerce platform with the following two services:

- Order Service

- Responsible for managing customer orders. Exposes an API like:

- POST /createOrder

{
  "userId": "101",
  "productId": "555",
  "quantity": 2
}

- Inventory Service

- Tracks product stock. Exposes an API like:

- POST /deductStock

{
  "productId": "555",
  "quantity": 2
}
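
Here is a minimal, runnable sketch of these two services using only Python's standard library, with Order Service calling Inventory Service synchronously over HTTP as described in the scenario that follows. The in-memory `STOCK` dictionary, the status codes (201, 409, 503), the 2-second timeout, and the hard-wired `inventory_addr` (standing in for service discovery) are assumptions for illustration, not part of the endpoints described above.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Any, Dict, Tuple

# Inventory Service's own data (hypothetical in-memory stand-in for its database).
STOCK: Dict[str, int] = {"555": 5}
STOCK_LOCK = threading.Lock()


def post_json(addr: Tuple[str, int], path: str, body: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
    """Send a JSON POST and return (status, parsed body); gives up after 2 seconds."""
    conn = HTTPConnection(addr[0], addr[1], timeout=2)
    try:
        conn.request("POST", path, json.dumps(body), {"Content-Type": "application/json"})
        resp = conn.getresponse()
        return resp.status, json.loads(resp.read() or b"{}")
    finally:
        conn.close()


class JsonHandler(BaseHTTPRequestHandler):
    def read_json(self) -> Dict[str, Any]:
        return json.loads(self.rfile.read(int(self.headers.get("Content-Length", 0))))

    def send_json(self, status: int, body: Dict[str, Any]) -> None:
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args: Any) -> None:
        pass  # silence per-request logging for the demo


class InventoryHandler(JsonHandler):
    def do_POST(self) -> None:
        if self.path != "/deductStock":
            return self.send_json(404, {"error": "not found"})
        req = self.read_json()
        product, qty = req["productId"], req["quantity"]
        with STOCK_LOCK:  # check-and-deduct must be atomic across concurrent requests
            remaining = STOCK.get(product, 0) - qty
            if remaining >= 0:
                STOCK[product] = remaining
        if remaining < 0:
            return self.send_json(409, {"error": "insufficient stock"})
        self.send_json(200, {"productId": product, "remaining": remaining})


class OrderHandler(JsonHandler):
    inventory_addr: Tuple[str, int] = ("127.0.0.1", 0)  # set at startup (stand-in for discovery)

    def do_POST(self) -> None:
        if self.path != "/createOrder":
            return self.send_json(404, {"error": "not found"})
        order = self.read_json()
        try:
            # Synchronous call: this request blocks until Inventory Service answers.
            status, _ = post_json(self.inventory_addr, "/deductStock",
                                  {"productId": order["productId"], "quantity": order["quantity"]})
        except OSError:  # connection refused, timeout, or reset
            return self.send_json(503, {"error": "inventory unavailable"})
        if status != 200:
            return self.send_json(409, {"error": "out of stock"})
        self.send_json(201, {"status": "CONFIRMED", **order})


def start(handler: type) -> Tuple[ThreadingHTTPServer, Tuple[str, int]]:
    """Serve `handler` on a free localhost port in a background thread."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, (server.server_address[0], server.server_address[1])


if __name__ == "__main__":
    inventory, inventory_addr = start(InventoryHandler)
    OrderHandler.inventory_addr = inventory_addr
    orders, orders_addr = start(OrderHandler)
    print(post_json(orders_addr, "/createOrder", {"userId": "101", "productId": "555", "quantity": 2}))
    inventory.shutdown()
    orders.shutdown()
```

Note that the order request fails with 503 whenever Inventory Service is unreachable: that is the tight coupling of synchronous calls in practice.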

- Scenario:

- When a customer places an order:

- Order Service receives the request.

- It then makes a REST API call to Inventory Service to deduct stock.

- If inventory is available, the order is confirmed.

- If not, it returns an error.

- Alternatively, using async communication:

- Order Service publishes an event OrderPlaced.

- Inventory Service consumes this event and deducts stock.

- If stock is insufficient, it emits a StockUnavailable event to be handled by the Order Service.

- This approach is decentralized and scalable: Order Service doesn’t wait on Inventory Service, and each can be scaled independently.
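
The event-driven variant can be sketched with an in-memory bus standing in for Kafka or NATS. Order Service records the order as pending and publishes `OrderPlaced`; Inventory Service reacts by deducting stock or publishing `StockUnavailable`. The `StockReserved` success event, the order statuses, and all class names are assumptions added for illustration.

```python
from collections import defaultdict, deque
from typing import Any, Callable, DefaultDict, Deque, Dict, List, Tuple

Payload = Dict[str, Any]
Handler = Callable[[Payload], None]


class EventBus:
    """In-memory stand-in for a broker: publish() returns immediately,
    run() later delivers queued events to every subscriber of that type."""

    def __init__(self) -> None:
        self.subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.pending: Deque[Tuple[str, Payload]] = deque()

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self.subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Payload) -> None:
        self.pending.append((event_type, payload))  # the publisher never waits for consumers

    def run(self) -> None:
        while self.pending:
            event_type, payload = self.pending.popleft()
            for handler in self.subscribers[event_type]:
                handler(payload)


class InventoryService:
    def __init__(self, bus: EventBus, stock: Dict[str, int]) -> None:
        self.bus, self.stock = bus, stock
        bus.subscribe("OrderPlaced", self.on_order_placed)

    def on_order_placed(self, event: Payload) -> None:
        remaining = self.stock.get(event["productId"], 0) - event["quantity"]
        if remaining < 0:
            self.bus.publish("StockUnavailable", {"orderId": event["orderId"]})
        else:
            self.stock[event["productId"]] = remaining
            self.bus.publish("StockReserved", {"orderId": event["orderId"]})  # hypothetical event


class OrderService:
    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        self.orders: Dict[str, str] = {}  # orderId -> status
        bus.subscribe("StockReserved", self.on_stock_reserved)
        bus.subscribe("StockUnavailable", self.on_stock_unavailable)

    def place_order(self, order_id: str, product_id: str, quantity: int) -> None:
        self.orders[order_id] = "PENDING"  # not confirmed until Inventory Service reacts
        self.bus.publish("OrderPlaced",
                         {"orderId": order_id, "productId": product_id, "quantity": quantity})

    def on_stock_reserved(self, event: Payload) -> None:
        self.orders[event["orderId"]] = "CONFIRMED"

    def on_stock_unavailable(self, event: Payload) -> None:
        self.orders[event["orderId"]] = "CANCELLED"
```

Note that `place_order` returns before inventory has been checked; the order only becomes confirmed or cancelled once the events are processed. That delay is the eventual consistency discussed earlier.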

Conclusion

Microservices can be a game-changer for modern software, offering speed, scalability, and resilience. But with great power comes great complexity. To succeed with microservices, you’ll need a sharp focus on design, reliable communication, robust infrastructure, and deep domain expertise. Get it right, and the payoff is substantial.